Introduction to Deep Learning: What Beginners Need to Know

In the ever-evolving landscape of artificial intelligence (AI), deep learning stands out as a transformative technology driving innovations from self-driving cars to virtual assistants. A subset of machine learning, deep learning enables computers to learn from vast amounts of data using artificial neural networks inspired by the human brain. Its ability to process complex patterns has made it a cornerstone of modern AI, powering applications that seemed like science fiction just a decade ago. According to a 2024 MarketsandMarkets report, the deep learning market is projected to grow to $135 billion by 2030, reflecting its impact across industries.

For beginners, deep learning can seem daunting, with its technical jargon and mathematical underpinnings. However, with the right foundation, anyone can grasp its core concepts and start exploring this exciting field. This article serves as an accessible introduction to deep learning, breaking down its basics, showcasing real-world applications, and outlining the prerequisites needed to dive in. Whether you’re an aspiring data scientist or simply curious about AI, this guide will equip you with the knowledge and confidence to begin your deep learning journey.

What is Deep Learning?

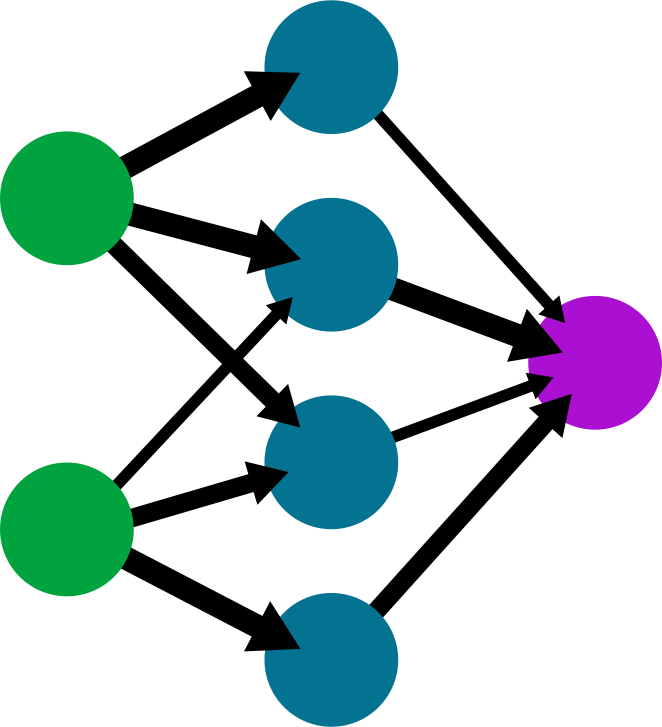

Deep learning is a subset of machine learning that uses artificial neural networks (ANNs) with multiple layers—hence the term “deep”—to model and solve complex problems. Unlike traditional machine learning, which often relies on hand-crafted features, deep learning automatically learns features from raw data, making it highly effective for tasks like image recognition, natural language processing (NLP), and speech synthesis.

Key Concepts in Deep Learning

To understand deep learning, let’s break down its core components:

- Artificial Neural Networks (ANNs): These are computational models inspired by the human brain’s neurons. Each neuron processes input, applies a mathematical operation, and passes the output to the next layer.

- Layers:

  - Input Layer: Receives raw data (e.g., pixel values of an image).

  - Hidden Layers: Perform computations to extract features (e.g., edges in an image). Deep networks have many hidden layers, enabling complex pattern recognition.

  - Output Layer: Produces the final result (e.g., a classification like “cat” or “dog”).

- Weights and Biases: Parameters that the network adjusts during training to minimize errors.

- Activation Functions: Introduce non-linearity, allowing the network to model complex relationships. Common functions include ReLU (Rectified Linear Unit), Sigmoid, and Tanh.

- Loss Function: Measures the difference between the network’s predictions and actual outcomes (e.g., mean squared error for regression).

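- Backpropagation: An algorithm that computes the gradient of the loss with respect to every weight and bias. An optimizer such as gradient descent then uses these gradients to update the parameters and reduce the loss.

- Epochs and Batches: An epoch is one full pass over the training dataset. Training usually runs for many epochs, with the data processed in smaller chunks (batches) for efficiency.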
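To make these components concrete, here is a minimal sketch of a single forward pass through a tiny network, written in plain Python so it has no dependencies. The weights, biases, and input values are hypothetical, hand-picked numbers chosen only for illustration; a real network would learn its parameters during training.

```python
import math

def relu(x):
    """ReLU activation: keep positive values, clamp negatives to zero."""
    return max(0.0, x)

def sigmoid(x):
    """Sigmoid activation: squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    """One layer: each neuron computes activation(w . x + b)."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

def mse(predictions, targets):
    """Loss function: mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

# Hypothetical parameters (illustrative values, not learned ones).
hidden_weights = [[0.5, -0.2], [0.3, 0.8]]  # 2 hidden neurons, 2 inputs each
hidden_biases = [0.1, -0.1]
output_weights = [[1.0, -1.0]]              # 1 output neuron, 2 hidden inputs
output_biases = [0.0]

x = [1.0, 2.0]                                                      # input layer
hidden = dense(x, hidden_weights, hidden_biases, relu)              # hidden layer
prediction = dense(hidden, output_weights, output_biases, sigmoid)  # output layer
loss = mse(prediction, [1.0])                                       # target is 1.0

print(f"hidden={hidden}, prediction={prediction}, loss={loss:.4f}")
```

Training would repeat this forward pass many times, using backpropagation to nudge the weights and biases so that the loss shrinks.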

Deep Learning vs. Traditional Machine Learning

- Feature Engineering: Traditional machine learning often relies on manually engineered features (e.g., hand-coded edge detectors for images), while deep learning learns features automatically.

- Data Requirements: Deep learning thrives on large datasets, whereas traditional methods can work with smaller data.

- Complexity: Deep learning models are more computationally intensive and usually train much faster on GPUs or TPUs than on CPUs.

- Applications: Deep learning excels in unstructured data tasks (e.g., images, text), while traditional methods are often sufficient for structured data (e.g., tabular data).

Analogy: Think of deep learning as teaching a child to recognize animals by showing thousands of pictures, letting them figure out features like ears or tails, while traditional machine learning is like giving the child a checklist of features to look for.

How Deep Learning Works

At its core, deep learning involves training a neural network to map inputs to outputs. Here’s a simplified overview of the process:

- Data Preparation: Collect and preprocess data (e.g., normalize pixel values, tokenize text).

- Model Architecture: Design the neural network, specifying the number of layers, neurons, and activation functions.

- Training:

  - Feed data through the network to make predictions.

  - Calculate the loss (error) between predictions and actual values.

  - Use backpropagation to compute gradients, then update the weights and biases to reduce the loss.

  - Repeat over multiple epochs until the model converges.

- Evaluation: Test the model on unseen data to assess performance (e.g., accuracy, F1-score).

- Deployment: Integrate the model into applications for real-world use (e.g., a chatbot or image classifier).

Example: To build an image classifier for cats vs. dogs, you’d feed thousands of labeled images into a convolutional neural network (CNN), train it to recognize features like whiskers or fur patterns, and deploy it to classify new images.
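The workflow listed above can be sketched end to end on a toy problem. The example below is a deliberately small stand-in, not a deep network: it trains a single sigmoid neuron on synthetic data using mini-batches and epochs, then evaluates it on unseen points. The dataset, learning rate, batch size, and epoch count are all assumptions chosen for illustration.

```python
import math
import random

random.seed(0)

# 1. Data preparation: synthetic points labeled 1 when x1 + x2 > 1, else 0.
def make_data(n):
    data = []
    for _ in range(n):
        x1, x2 = random.random(), random.random()
        data.append(((x1, x2), 1.0 if x1 + x2 > 1.0 else 0.0))
    return data

train_set, test_set = make_data(200), make_data(100)

# 2. Model architecture: one sigmoid neuron (two weights and one bias).
w = [0.0, 0.0]
b = 0.0

def predict(x):
    z = w[0] * x[0] + w[1] * x[1] + b
    return 1.0 / (1.0 + math.exp(-z))

# 3. Training: forward pass, gradient of the loss, parameter update.
learning_rate, batch_size = 0.5, 20
for epoch in range(200):
    random.shuffle(train_set)
    for start in range(0, len(train_set), batch_size):
        batch = train_set[start:start + batch_size]
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in batch:
            # Gradient of cross-entropy loss w.r.t. z for a sigmoid output.
            error = predict(x) - y
            grad_w[0] += error * x[0]
            grad_w[1] += error * x[1]
            grad_b += error
        # Gradient descent step using the batch-averaged gradient.
        w[0] -= learning_rate * grad_w[0] / len(batch)
        w[1] -= learning_rate * grad_w[1] / len(batch)
        b -= learning_rate * grad_b / len(batch)

# 4. Evaluation: accuracy on data the model never saw during training.
correct = sum((predict(x) > 0.5) == (y == 1.0) for x, y in test_set)
accuracy = correct / len(test_set)
print(f"Test accuracy: {accuracy:.2f}")
```

A deep learning framework automates step 3 (the gradient computation in particular), but the loop structure stays the same.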

Real-World Applications of Deep Learning

Deep learning’s ability to handle complex, unstructured data has led to breakthroughs across industries. Here are some impactful applications, with examples to illustrate their significance:

1. Computer Vision

What It Is: Deep learning models, particularly convolutional neural networks (CNNs), analyze images or videos to recognize objects, faces, or patterns.

Real-World Example: Tesla’s autonomous vehicles use deep learning to process camera feeds, identifying pedestrians, traffic signs, and lane markings in real time. CNNs trained on millions of driving images enable the car to navigate safely, as highlighted in Tesla’s 2024 AI Day presentation.

Impact: Computer vision enhances safety in autonomous driving, improves medical imaging (e.g., detecting tumors), and powers facial recognition in security systems.
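The core operation inside a CNN is the convolution: a small grid of weights (a kernel) slides over the image and responds strongly where a pattern appears. The sketch below applies a hand-written vertical-edge kernel to a tiny 5x5 image in plain Python. Both the image and the kernel are made up for illustration; a trained CNN would learn its kernels from data rather than have them written by hand.

```python
def conv2d(image, kernel):
    """Slide a square kernel over the image (no padding, stride 1)."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(k):
                for dj in range(k):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# Tiny grayscale image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]

# Kernel that responds where brightness increases from left to right.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = conv2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The feature map is zero over the uniform dark region and positive wherever the kernel window overlaps the dark-to-bright boundary, which is the kind of "edge" feature early CNN layers tend to pick up.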

2. Natural Language Processing (NLP)

What It Is: Deep learning models, such as transformers (e.g., BERT, GPT), process and generate human language for tasks like translation, chatbots, or sentiment analysis.

Real-World Example: Google Translate leverages transformer models to translate text across over 100 languages with near-human accuracy. For instance, a business might use it to translate customer emails from Spanish to English, streamlining global communication.

Impact: NLP improves customer service (e.g., chatbots), automates content creation, and enables cross-lingual collaboration.
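Transformers are built around an operation called attention, in which every token computes a weighted mix of all tokens in the sequence. Here is a minimal sketch of scaled dot-product attention in plain Python. The two-dimensional token embeddings are hypothetical, and the learned projection matrices that real transformers apply to produce queries, keys, and values are omitted for brevity.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
            for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        mixed = [
            sum(w * v[c] for w, v in zip(weights, values))
            for c in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings for a three-token sentence (self-attention:
# the same vectors serve as queries, keys, and values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)

for i, row in enumerate(weights):
    print(f"token {i} attends with weights {[round(w, 3) for w in row]}")
```

Each row of weights sums to 1, so every output is a blend of the input tokens, with more weight on the tokens most similar to the query.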

3. Speech Recognition

What It Is: Deep learning converts spoken language into text or commands, using recurrent neural networks (RNNs) or transformers.

Real-World Example: Amazon’s Alexa uses deep learning to process voice commands like “Play my favorite playlist” or “Set a timer for 10 minutes.” The model transcribes speech, identifies intent, and triggers actions, as described in Amazon’s 2023 developer blog.

Impact: Speech recognition enhances accessibility (e.g., voice-to-text for people who are deaf or hard of hearing) and productivity (e.g., hands-free operations in warehouses).

4. Healthcare

What It Is: Deep learning analyzes medical data, such as images or patient records, to support diagnosis, treatment, or drug discovery.

Real-World Example: IBM Watson Health uses deep learning to analyze CT scans for early lung cancer detection. CNNs trained on thousands of scans identify subtle patterns, improving detection rates by 20%, according to a 2024 study in The Lancet.

Impact: Deep learning accelerates diagnosis, personalizes treatments, and reduces healthcare costs.

5. Recommendation Systems

What It Is: Deep learning models predict user preferences based on behavior, using collaborative filtering or neural networks.

Real-World Example: Netflix’s recommendation engine uses deep learning to suggest shows based on viewing history, ratings, and user demographics. This personalization drives 80% of content consumption, as reported in Netflix’s 2023 investor report.

Impact: Recommendation systems boost customer engagement and revenue in e-commerce, streaming, and social media.
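To illustrate the collaborative filtering idea mentioned above, the sketch below recommends titles by finding the user with the most similar ratings. This is the classical, non-neural version of the technique, shown because it fits in a few lines; production systems such as Netflix’s are far more elaborate. All user names, titles, and ratings are invented for the example.

```python
import math

# Hypothetical ratings (1-5). A missing title means "not watched yet".
ratings = {
    "ana":   {"Drama A": 5, "Comedy B": 1, "Thriller C": 4},
    "ben":   {"Drama A": 4, "Comedy B": 2, "Thriller C": 5, "Sci-Fi D": 5},
    "chloe": {"Drama A": 1, "Comedy B": 5, "Romance E": 4},
}

def cosine_similarity(a, b):
    """Cosine similarity over the titles both users have rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[t] * b[t] for t in shared)
    norm_a = math.sqrt(sum(a[t] ** 2 for t in shared))
    norm_b = math.sqrt(sum(b[t] ** 2 for t in shared))
    return dot / (norm_a * norm_b)

def recommend(user):
    """Suggest unseen titles from the most similar other user."""
    others = [u for u in ratings if u != user]
    nearest = max(
        others, key=lambda u: cosine_similarity(ratings[user], ratings[u])
    )
    unseen = [t for t in ratings[nearest] if t not in ratings[user]]
    # Highest-rated unseen titles first.
    unseen.sort(key=lambda t: ratings[nearest][t], reverse=True)
    return nearest, unseen

neighbor, suggestions = recommend("ana")
print(f"Most similar user: {neighbor}; suggestions: {suggestions}")
```

Deep learning approaches replace the hand-written similarity with learned embeddings for users and items, but the goal of matching users to content they have not seen yet is the same.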

Prerequisites for Learning Deep Learning

Deep learning is an advanced topic, but with the right foundation, beginners can master it. Here are the key prerequisites, along with resources and steps to build your skills:

1. Programming

Why It Matters: Deep learning involves writing code to build, train, and deploy models.

Key Skills:

- Python: The primary language for deep learning, with libraries like TensorFlow, PyTorch, NumPy, and pandas.

- Basic Syntax: Understand variables, loops, functions, and data structures (e.g., lists, arrays).

- Data Manipulation: Use pandas for cleaning and Matplotlib for visualization.

How to Learn:

- Courses: DataTech Academy’s Python for Data Science or Coursera’s Python for Everybody by University of Michigan.

- Practice: Write a Python script to load a dataset (e.g., Kaggle’s Iris dataset) and visualize it with Matplotlib.

Example Code (Loading and Visualizing Data):

```python
import pandas as pd
import matplotlib.pyplot as plt

# Load Iris dataset
df = pd.read_csv('iris.csv')

# Color each point by species (encoded as integer category codes)
plt.scatter(df['sepal_length'], df['sepal_width'],
            c=df['species'].astype('category').cat.codes)
plt.xlabel('Sepal Length')
plt.ylabel('Sepal Width')
plt.title('Iris Dataset Visualization')
plt.show()
```

2. Mathematics

Why It Matters: Deep learning relies on mathematical concepts to understand how models learn and optimize.

Key Skills:

- Linear Algebra: Vectors, matrices, and operations like dot products, essential for understanding neural network computations.

- Calculus: Gradients and optimization techniques (e.g., gradient descent) for minimizing loss functions.

- Probability and Statistics: Probability distributions, hypothesis testing, and metrics like accuracy or F1-score for model evaluation.

How to Learn:

- Courses: Khan Academy’s Linear Algebra and Calculus courses or MIT OpenCourseWare’s Statistics for Applications.

- Practice: Implement gradient descent in Python to optimize a simple function.

Example Code (Gradient Descent):

```python
def gradient_descent(start, learning_rate, n_iterations):
    x = start
    for _ in range(n_iterations):
        gradient = 2 * x  # Derivative of f(x) = x^2
        x = x - learning_rate * gradient
    return x

# Find minimum of f(x) = x^2
result = gradient_descent(start=10, learning_rate=0.1, n_iterations=100)
print(f"Minimum found at x = {result}")
```
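A useful habit when working with gradients is to check a hand-derived derivative, such as the `2 * x` used for f(x) = x^2, against a numerical estimate. The sketch below uses a central finite difference for that check; the step size `eps` and the test points are arbitrary choices for illustration.

```python
def f(x):
    return x ** 2

def analytic_gradient(x):
    """Derivative of f(x) = x^2, worked out by hand."""
    return 2 * x

def numerical_gradient(func, x, eps=1e-6):
    """Central difference: (f(x + eps) - f(x - eps)) / (2 * eps)."""
    return (func(x + eps) - func(x - eps)) / (2 * eps)

for point in [-3.0, 0.5, 10.0]:
    approx = numerical_gradient(f, point)
    exact = analytic_gradient(point)
    print(f"x={point}: numerical={approx:.6f}, analytic={exact:.6f}")
```

If the two values disagree noticeably, the hand-derived gradient is probably wrong. Deep learning frameworks compute gradients automatically, but the same check is handy when writing custom operations.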

3. Machine Learning Basics

Why It Matters: Deep learning builds on machine learning concepts like supervised and unsupervised learning.

Key Skills:

- Understand regression, classification, and clustering.

- Familiarity with evaluation metrics (e.g., accuracy, precision, recall).

- Knowledge of overfitting, underfitting, and techniques like cross-validation.

How to Learn:

- Courses: Coursera’s Machine Learning by Andrew Ng.

- Practice: Build a simple linear regression model using scikit-learn.

Example Code (Linear Regression):

```python
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
import pandas as pd

# Load dataset
df = pd.read_csv('data.csv')
X = df[['feature1']]
y = df['target']
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train model
model = LinearRegression()
model.fit(X_train, y_train)

# For regressors, score() returns R^2 (coefficient of determination), not accuracy
print(f"Model R^2 score: {model.score(X_test, y_test)}")
```
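The classification metrics listed under Key Skills (accuracy, precision, recall) are easy to compute by hand, which helps in understanding what library functions report. The sketch below does so in plain Python for a binary classifier; the label lists are made-up values for illustration.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (0/1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical ground-truth labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]

metrics = classification_metrics(y_true, y_pred)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Here precision (how many predicted positives were right) and recall (how many actual positives were found) differ, which is why looking at accuracy alone can hide how a model fails.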

4. Data Science Tools

Why It Matters: Deep learning projects require tools for data handling, model building, and visualization.

Key Skills:

- NumPy: For numerical operations and array manipulation.

- pandas: For data cleaning and preprocessing.

- Matplotlib/Seaborn: For visualizing data and model performance.

- Jupyter Notebooks: For interactive coding and experimentation.

How to Learn:

- Courses: DataCamp’s Data Scientist with Python track.

- Practice: Use Jupyter Notebook to preprocess a dataset and visualize correlations.

Example Code (Correlation Heatmap):

```python
import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns

# Load dataset
df = pd.read_csv('data.csv')

# Correlations are only defined for numeric columns
correlation_matrix = df.corr(numeric_only=True)
sns.heatmap(correlation_matrix, annot=True, cmap='coolwarm')
plt.title('Correlation Heatmap')
plt.show()
```

Tools and Frameworks for Deep Learning

To build and deploy deep learning models, you’ll need to familiarize yourself with popular Python-based frameworks and tools. Here are the most widely used ones:

1. TensorFlow

What It Is: An open-source framework by Google for building and deploying deep learning models.

Key Features:

- Supports both CPU and GPU computation.

- Keras API for high-level model building.

- Scalable for production environments.

Use Case: Build a convolutional neural network (CNN) for image classification.

Example Code (Simple CNN):

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Conv2D, MaxPooling2D, Flatten

model = Sequential([
    Conv2D(32, (3, 3), activation='relu', input_shape=(64, 64, 3)),  # 64x64 RGB input
    MaxPooling2D((2, 2)),
    Flatten(),
    Dense(10, activation='softmax')  # 10 output classes
])
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
```

2. PyTorch

What It Is: An open-source framework originally developed by Facebook (now Meta), known for its flexibility and dynamic computation graphs.

Key Features:

- Ideal for research and prototyping.

- Easy debugging with standard Python tools, because models run as ordinary Python code.

- Strong support for GPU acceleration.

Use Case: Train a recurrent neural network (RNN) for text generation.

Example Code (Simple RNN):

```python
import torch
import torch.nn as nn

class RNN(nn.Module):
    def __init__(self, input_size, hidden_size, output_size):
        super().__init__()
        self.rnn = nn.RNN(input_size, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, output_size)

    def forward(self, x):
        out, _ = self.rnn(x)          # out: (batch, seq_len, hidden_size)
        out = self.fc(out[:, -1, :])  # use the last time step's output
        return out
```

3. Other Tools

- scikit-learn: For preprocessing and baseline machine learning models.

- Hugging Face Transformers: For NLP tasks like text classification or generation.

- OpenCV: For image processing in computer vision tasks.

How to Learn: Explore official documentation or tutorials on platforms like Towards Data Science or PyTorch’s official website.

Challenges in Deep Learning

While powerful, deep learning comes with challenges that beginners should be aware of:

- Data Requirements: Deep learning models require large, high-quality datasets. For example, training a CNN for image classification might need thousands of labeled images.

Solution: Use data augmentation (e.g., flipping images) or transfer learning (e.g., fine-tuning a pre-trained model like ResNet).

- Computational Resources: Training deep models is resource-intensive, often requiring GPUs or cloud services.

Solution: Use cloud platforms like Google Colab or AWS, which offer free or affordable GPU access.

- Overfitting: Models may perform well on training data but poorly on unseen data.

Solution: Apply regularization (e.g., dropout, L2 regularization) and use validation datasets.

Example Code (Dropout in Keras):

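```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Dropout

model = Sequential([
    Dense(64, activation='relu', input_shape=(100,)),
    Dropout(0.5),  # randomly zero 50% of activations during training
    Dense(10, activation='softmax')
])
```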

- Hyperparameter Tuning: Choosing the right learning rate, batch size, or number of layers can be tricky.

Solution: Use grid search or libraries like Optuna for automated tuning.

- Interpretability: Deep learning models are often seen as “black boxes,” making it hard to understand their decisions.

Solution: Use tools like SHAP or LIME for model interpretability.
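To see why hyperparameter tuning matters, the sketch below runs a tiny grid search over learning rates for gradient descent on f(x) = x^2. The candidate values, iteration count, and starting point are arbitrary assumptions for illustration. Tuning a real model would compare validation scores instead of the final value of a toy function.

```python
def final_loss(learning_rate, n_iterations=20, start=10.0):
    """Run gradient descent on f(x) = x^2 and return the final loss."""
    x = start
    for _ in range(n_iterations):
        x -= learning_rate * 2 * x  # gradient of x^2 is 2x
    return x ** 2

# Grid search: try every candidate and keep the one with the lowest loss.
candidates = [0.001, 0.01, 0.1, 0.5, 1.1]
results = {lr: final_loss(lr) for lr in candidates}
best = min(results, key=results.get)

for lr, loss in results.items():
    print(f"learning_rate={lr}: final loss={loss:.6g}")
print(f"Best learning rate: {best}")
```

Very small learning rates barely move from the starting point in 20 steps, while 1.1 overshoots further on every step and diverges. On this particular function 0.5 jumps straight to the minimum, which is a quirk of the quadratic and not a general recommendation.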

Future Trends in Deep Learning

Deep learning continues to evolve, with exciting trends shaping its future:

- Efficient Models: Techniques like model pruning and quantization reduce model size and speed up inference, making deep learning accessible on edge devices like smartphones.

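- Self-Supervised Learning: Models learn from unlabeled or weakly labeled data, reducing the need for large hand-labeled datasets. CLIP, for example, learns from image-caption pairs collected from the web instead of manually assigned class labels.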

- AI Ethics and Fairness: Efforts are underway to address bias in models, ensuring fair outcomes in applications like hiring or lending.

- Federated Learning: Enables training models across decentralized devices while preserving privacy, useful in healthcare and IoT.

- Multimodal Models: Combining text, images, and audio (e.g., DALL-E, GPT-4) for richer applications like generative AI.
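The quantization idea listed under Efficient Models can be illustrated with a few lines of plain Python. This is a simplified sketch of symmetric 8-bit quantization using a single scale factor for all values, and it assumes at least one weight is non-zero. The weight values are hypothetical, and real toolkits use more refined schemes.

```python
def quantize(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    scale = max(abs(w) for w in weights) / 127
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.82, -1.27, 0.05, 0.4, -0.63]  # hypothetical layer weights
quantized, scale = quantize(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(f"quantized: {quantized}")
print(f"scale: {scale:.4f}, max rounding error: {max_error:.6f}")
```

Each weight now fits in one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale factor per weight.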

Example Code (Using Hugging Face for Text Classification):

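```python
from transformers import pipeline

# Downloads a default pre-trained sentiment model on first use
classifier = pipeline('sentiment-analysis')
result = classifier("I love learning deep learning!")
print(result)
# Example output (exact score varies): [{'label': 'POSITIVE', 'score': 0.999}]
```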

Conclusion

Deep learning is a powerful and transformative field, enabling machines to tackle tasks once thought exclusive to humans, from recognizing images to generating human-like text. For beginners, the journey starts with mastering Python programming, understanding mathematical foundations, and exploring machine learning basics. By leveraging tools like TensorFlow, PyTorch, and pandas, and tackling challenges like overfitting or resource constraints, anyone can build and deploy deep learning models. As the field evolves with trends like self-supervised learning and AI ethics, the opportunities for innovation are boundless. Start small—write a Python script, experiment with a dataset, or train a simple neural network—and let curiosity guide your path into the exciting world of deep learning.